Accelerating AI Development with NVIDIA TAO Toolkit and MediaTek NeuroPilot SDK

Introduction

AI developers constantly seek efficient ways to optimize and deploy models on edge devices. The integration of NVIDIA TAO Toolkit with MediaTek NeuroPilot SDK offers a streamlined approach to developing AI solutions that leverage MediaTek’s NPU for accelerated inference. This combination simplifies model training, optimization, and deployment, making AI more accessible to developers across various industries.

Understanding NVIDIA TAO Toolkit

The NVIDIA TAO Toolkit is designed to simplify AI model training through transfer learning. Built on TensorFlow and PyTorch, it enables developers to fine-tune pre-trained models with minimal data, avoiding the cost and complexity of training from scratch. Key features include:

- Pre-trained models: Over 100 ready-to-use models for vision AI applications

- Automated tuning: Optimizes AI models for inference without requiring deep AI expertise

- Efficient deployment: Converts models into formats compatible with edge devices
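To make the transfer-learning idea concrete, here is a toy, standard-library-only Python sketch of one common pattern: keep a pre-trained feature extractor frozen and train only a small classification head on a handful of labeled samples. Everything in it is hypothetical. `frozen_backbone` stands in for a real pre-trained network, and none of these functions are part of the TAO Toolkit, which is driven by its own spec files and commands.

```python
import math
from typing import List, Sequence, Tuple


def frozen_backbone(x: Sequence[float]) -> List[float]:
    """Hypothetical stand-in for a pre-trained feature extractor.

    Its 'weights' are fixed: fine-tuning below never changes this function.
    """
    return [x[0] + x[1], x[0] - x[1], x[0] * x[1]]


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fine_tune_head(samples, labels, epochs: int = 500, lr: float = 0.1) -> Tuple[List[float], float]:
    """Train only a logistic-regression head on top of the frozen features."""
    # Features are computed once because the backbone is frozen.
    feats = [frozen_backbone(s) for s in samples]
    w = [0.0] * len(feats[0])
    b = 0.0
    for _ in range(epochs):
        for f, y in zip(feats, labels):
            # Gradient of binary cross-entropy w.r.t. the logit is (p - y).
            g = sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b) - y
            w = [wi - lr * g * fi for wi, fi in zip(w, f)]
            b -= lr * g
    return w, b


def predict(w: List[float], b: float, x: Sequence[float]) -> int:
    f = frozen_backbone(x)
    return 1 if sum(wi * fi for wi, fi in zip(w, f)) + b > 0 else 0


# A tiny labeled set: the label is 1 when both inputs share a sign.
samples = [(1, 1), (-1, -1), (1, -1), (-1, 1), (2, 0.5), (-0.5, 2)]
labels = [1, 1, 0, 0, 1, 0]
w, b = fine_tune_head(samples, labels)
print([predict(w, b, s) for s in samples])
```

Because only the head's four parameters are learned, six samples are enough here; the pre-trained features do most of the work, which is the intuition behind fine-tuning with minimal data.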

MediaTek NeuroPilot SDK: AI Optimization for Edge Devices

The MediaTek NeuroPilot SDK is a powerful AI framework that enables efficient execution of AI models on MediaTek NPUs. It provides:

- Seamless model conversion: Converts ONNX models to TFLite for optimized inference

- Hardware acceleration: Leverages MediaTek’s MDLA (Deep Learning Accelerator) for high-performance AI processing

- Broad compatibility: Supports multiple AI frameworks and runtimes, including PyTorch and ONNX Runtime
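NPUs such as the MDLA typically run integer-quantized models, and integer quantization is one of the optimizations TFLite supports. The text above does not say which precision NeuroPilot targets, so the sketch below simply assumes TFLite-style asymmetric, per-tensor int8 quantization, where each real value is approximated as `scale * (q - zero_point)`. The helper names are illustrative and are not NeuroPilot or TFLite APIs.

```python
from typing import List, Sequence, Tuple

QMIN, QMAX = -128, 127  # int8 range


def choose_params(values: Sequence[float]) -> Tuple[float, int]:
    """Pick a per-tensor scale and zero point covering [min, max] of the data."""
    # Widen the range to include 0.0 so that zero is exactly representable.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    scale = (hi - lo) / (QMAX - QMIN) if hi > lo else 1.0
    zero_point = int(round(QMIN - lo / scale))
    return scale, max(QMIN, min(QMAX, zero_point))


def quantize(values: Sequence[float], scale: float, zero_point: int) -> List[int]:
    # q = round(x / scale) + zero_point, clamped to the int8 range.
    return [max(QMIN, min(QMAX, round(v / scale) + zero_point)) for v in values]


def dequantize(qs: Sequence[int], scale: float, zero_point: int) -> List[float]:
    # x is approximated as scale * (q - zero_point).
    return [scale * (q - zero_point) for q in qs]


activations = [-1.0, -0.5, 0.0, 0.25, 3.0]
scale, zp = choose_params(activations)
q = quantize(activations, scale, zp)
print(scale, zp, q)
print(dequantize(q, scale, zp))
```

Widening the range to include 0.0 keeps zero exact, which matters for padding and ReLU outputs; for values inside the calibrated range, the round-trip error stays within about half a quantization step (`scale / 2`).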

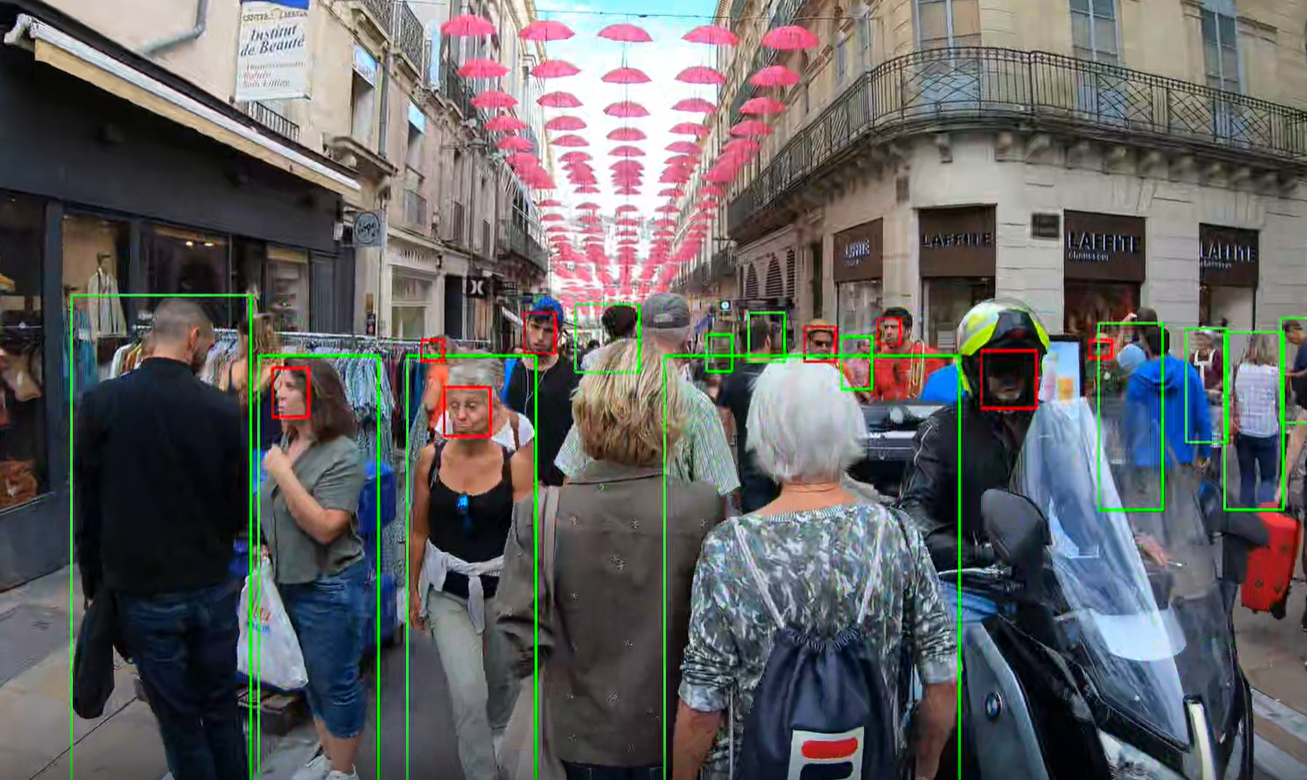

High accuracy pre-trained TAO models deployed on Genio platforms

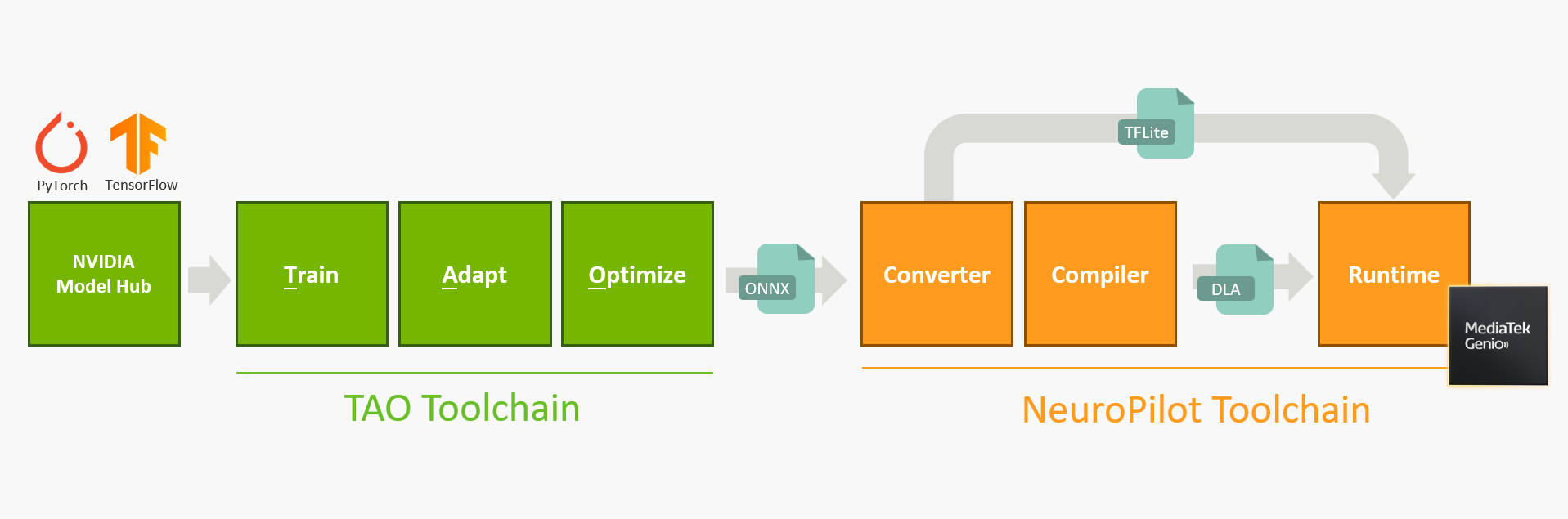

How TAO Toolkit and NeuroPilot SDK Work Together

The integration of NVIDIA TAO Toolkit with MediaTek NeuroPilot SDK creates a powerful AI development pipeline:

- Model Training with TAO Toolkit:
  - Developers fine-tune pre-trained models using transfer learning.
  - Models are optimized for inference throughput.

- Conversion to Edge-Compatible Format:
  - TAO-trained models are exported to ONNX format.
  - NeuroPilot SDK converts the ONNX models to TFLite; alternatively, the ONNX models can be deployed with ONNX Runtime on MediaTek platforms.

- Optimized Execution on MediaTek NPU:
  - The MDLA accelerator executes inference efficiently.
  - Developers can deploy AI applications across smart retail, healthcare, transportation, and IoT.
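The flow above can be sketched as a small Python pipeline in which each stage consumes the previous stage's artifact, and the last step branches between the two deployment paths (TFLite via NeuroPilot, or ONNX Runtime). The stage functions, model name, and dataset name are hypothetical placeholders that only track the model format; in practice each step would invoke the real TAO and NeuroPilot tools, whose commands are not shown here.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Artifact:
    name: str
    fmt: str  # "tao", "onnx" or "tflite"
    history: List[str] = field(default_factory=list)

    @property
    def filename(self) -> str:
        return f"{self.name}.{self.fmt}"


def fine_tune(pretrained: str, dataset: str) -> Artifact:
    # Placeholder for TAO transfer learning on a pre-trained model.
    return Artifact(f"{pretrained}_ft", "tao", [f"fine-tuned on {dataset}"])


def export_onnx(model: Artifact) -> Artifact:
    # Placeholder for exporting the TAO-trained model to ONNX.
    if model.fmt != "tao":
        raise ValueError(f"expected a TAO model, got {model.fmt}")
    return Artifact(model.name, "onnx", model.history + ["exported to ONNX"])


def convert_to_tflite(model: Artifact) -> Artifact:
    # Placeholder for the NeuroPilot ONNX-to-TFLite conversion.
    if model.fmt != "onnx":
        raise ValueError(f"expected an ONNX model, got {model.fmt}")
    return Artifact(model.name, "tflite", model.history + ["converted to TFLite"])


# Which model format each (hypothetical) deployment target expects.
RUNTIME_FORMATS: Dict[str, str] = {"tflite": "tflite", "onnxruntime": "onnx"}


def deploy(model: Artifact, runtime: str) -> Artifact:
    # Placeholder for deployment on a MediaTek platform.
    if RUNTIME_FORMATS.get(runtime) != model.fmt:
        raise ValueError(f"{runtime} cannot run a {model.fmt} model")
    return Artifact(model.name, model.fmt, model.history + [f"deployed with {runtime}"])


def run_pipeline(pretrained: str, dataset: str, runtime: str) -> Artifact:
    if runtime not in RUNTIME_FORMATS:
        raise ValueError(f"unknown runtime: {runtime}")
    model = export_onnx(fine_tune(pretrained, dataset))
    if runtime == "tflite":
        model = convert_to_tflite(model)
    return deploy(model, runtime)


result = run_pipeline("pretrained_detector", "retail_images", "tflite")
print(result.filename)  # prints pretrained_detector_ft.tflite
print(" -> ".join(result.history))
```

Checking the artifact format at every stage makes an out-of-order step fail early; for example, handing a TAO checkpoint straight to `deploy` raises `ValueError` instead of producing a broken deployment.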

Streamlined fine-tune, optimize, convert, deploy pipeline

Benefits for AI Developers

- Reduced development time: Pre-trained models and automated tuning accelerate AI deployment

- Optimized performance: NeuroPilot SDK ensures efficient execution on MediaTek NPUs

- Scalability: Supports AI applications across various industries, from smart cities to industrial IoT

Conclusion

The integration of NVIDIA TAO Toolkit with MediaTek NeuroPilot SDK empowers AI developers to create high-performance AI solutions with minimal complexity. By leveraging pre-trained models, automated tuning, and hardware acceleration, developers can rapidly deploy AI applications optimized for MediaTek’s NPU.

For more details, visit MediaTek’s TAO integration and NVIDIA TAO documentation.

* PyTorch, the PyTorch logo and any related marks are trademarks of The Linux Foundation

* TensorFlow, the TensorFlow logo and any related marks are trademarks of Google Inc